Adobe AI learns to spot the photo fakery Photoshop makes easy

Photoshop makes it easy to tamper with images, but neural networks could help you figure out which photos are trustworthy.

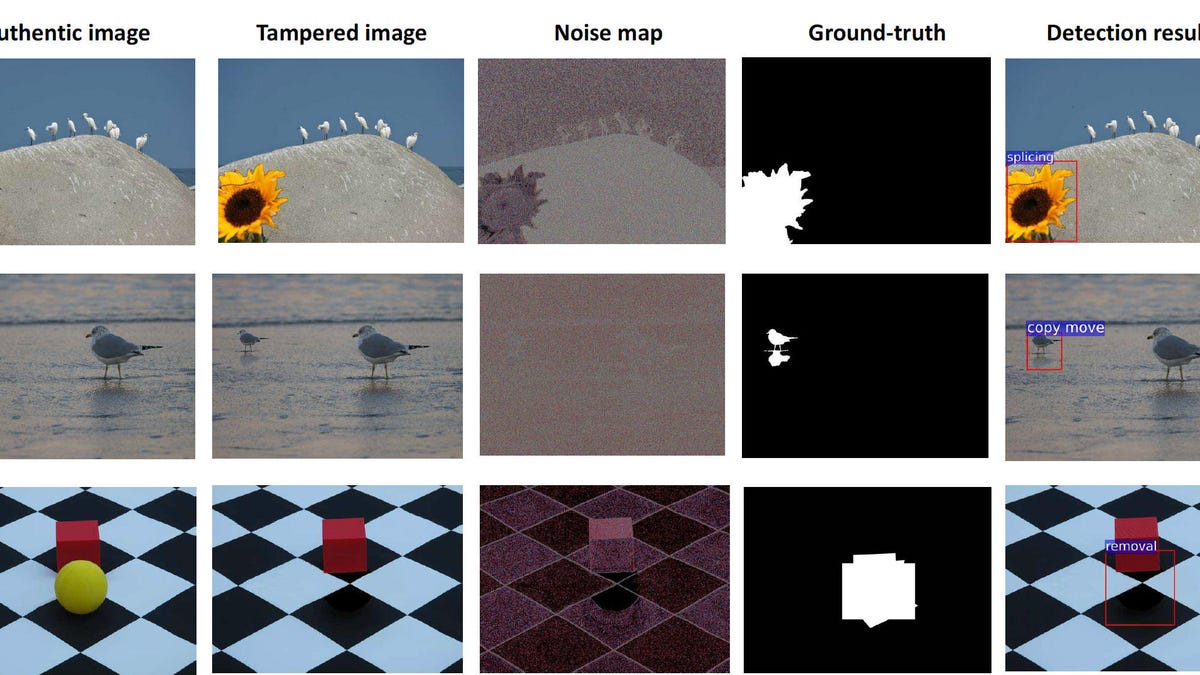

Trained with real-world tampered images, a neural network learns to spot telltale signs that a photo has been fooled with.

Photoshop fakery is getting more and more sophisticated, but Adobe Systems is using AI to detect when it's happened.

Plenty of photo editing is no big deal, but in some situations -- for example, photojournalism, viral photos of politicians or celebrities and forensic evidence used by law enforcement -- you might want a better idea of what's true or not.

Enter artificial intelligence -- specifically the neural network technology loosely based on human brains' ability to learn from real-world data, not rigid programming instructions. The technology has proven useful for detecting spam, flagging fraudulent credit card transactions, learning how to debate and understanding human speech. And it can spot signs of image editing, too -- specifically when noise speckles in one part of an image don't match another part or when there are unusual boundaries where new imagery has been spliced in.
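To make the "noise speckles don't match" cue concrete, here is a minimal sketch under stated assumptions. It is a hand-written heuristic, not Adobe's trained neural network. It strips out image content with a simple high-pass filter (each pixel minus the average of its four neighbors), measures how noisy each tile of the image is, and flags tiles whose noise level is far from the image-wide typical value. The function names, the 8-pixel tile size and the 4x ratio threshold are all hypothetical choices made for illustration.

```python
import random
from statistics import median, pvariance


def noise_residual(img):
    """High-pass residual: each interior pixel minus the mean of its 4 neighbors.

    Smooth image content largely cancels out, leaving mostly noise.
    Border pixels have no full neighborhood, so they are stored as None.
    """
    h, w = len(img), len(img[0])
    res = [[None] * w for _ in range(h)]
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            nbr = (img[i - 1][j] + img[i + 1][j] + img[i][j - 1] + img[i][j + 1]) / 4.0
            res[i][j] = img[i][j] - nbr
    return res


def block_noise_levels(img, block=8):
    """Variance of the residual inside each block x block tile, keyed by (row, col)."""
    res = noise_residual(img)
    h, w = len(img), len(img[0])
    levels = {}
    for bi in range(h // block):
        for bj in range(w // block):
            vals = [res[i][j]
                    for i in range(bi * block, (bi + 1) * block)
                    for j in range(bj * block, (bj + 1) * block)
                    if res[i][j] is not None]
            levels[(bi, bj)] = pvariance(vals)
    return levels


def flag_inconsistent_blocks(img, block=8, ratio=4.0):
    """Hypothetical detector: flag tiles whose noise level differs from the
    median tile by more than `ratio` times, in either direction."""
    levels = block_noise_levels(img, block)
    # Fall back to a tiny value so a perfectly noiseless image can't divide by zero.
    typical = median(levels.values()) or 1e-12
    flagged = [pos for pos, v in levels.items()
               if v / typical > ratio or v / typical < 1.0 / ratio]
    return sorted(flagged)


# Usage: a synthetic 32x32 grayscale "photo" with mild, uniform sensor noise...
rng = random.Random(0)
img = [[128 + rng.gauss(0, 2) for _ in range(32)] for _ in range(32)]
# ...into which an 8x8 patch from a much noisier source has been "spliced".
for i in range(8, 16):
    for j in range(16, 24):
        img[i][j] = 128 + rng.gauss(0, 10)
print(flag_inconsistent_blocks(img))  # lists the tiles that look out of place
```

A fixed threshold like this is easy to fool and ignores the splice-boundary cue entirely; the appeal of the neural network approach described here is that it learns which noise and edge patterns matter from real tampered examples instead of relying on hand-picked rules.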

"Even with careful inspection, humans find it difficult to recognize the tampered regions," wrote Adobe senior research scientist Vlad Morariu and colleagues from the University of Maryland in a paper presented at the Computer Vision and Pattern Recognition (CVPR) conference. "Our method not only detects tampering artifacts but also distinguishes between various tampering techniques."

Neural networks can deliver impressive results, but those results depend heavily on the quality of the data the network is trained on. In today's training processes, data is carefully labeled in advance so the computer can learn to recognize the patterns. That's a lot of work.

"Using tens of thousands of examples of known, manipulated images, we successfully trained a deep learning neural network to recognize image manipulation," Morariu said in an Adobe announcement of the technology Friday.
