In October 1969, humanity had put a man on the moon, but machines that could understand words seemed out of reach. “Speech recognition has glamor,” wrote J.R. Pierce in a paper published in the Acoustical Society of America’s journal that month. “Funds have been available. Results have been less glamorous.”

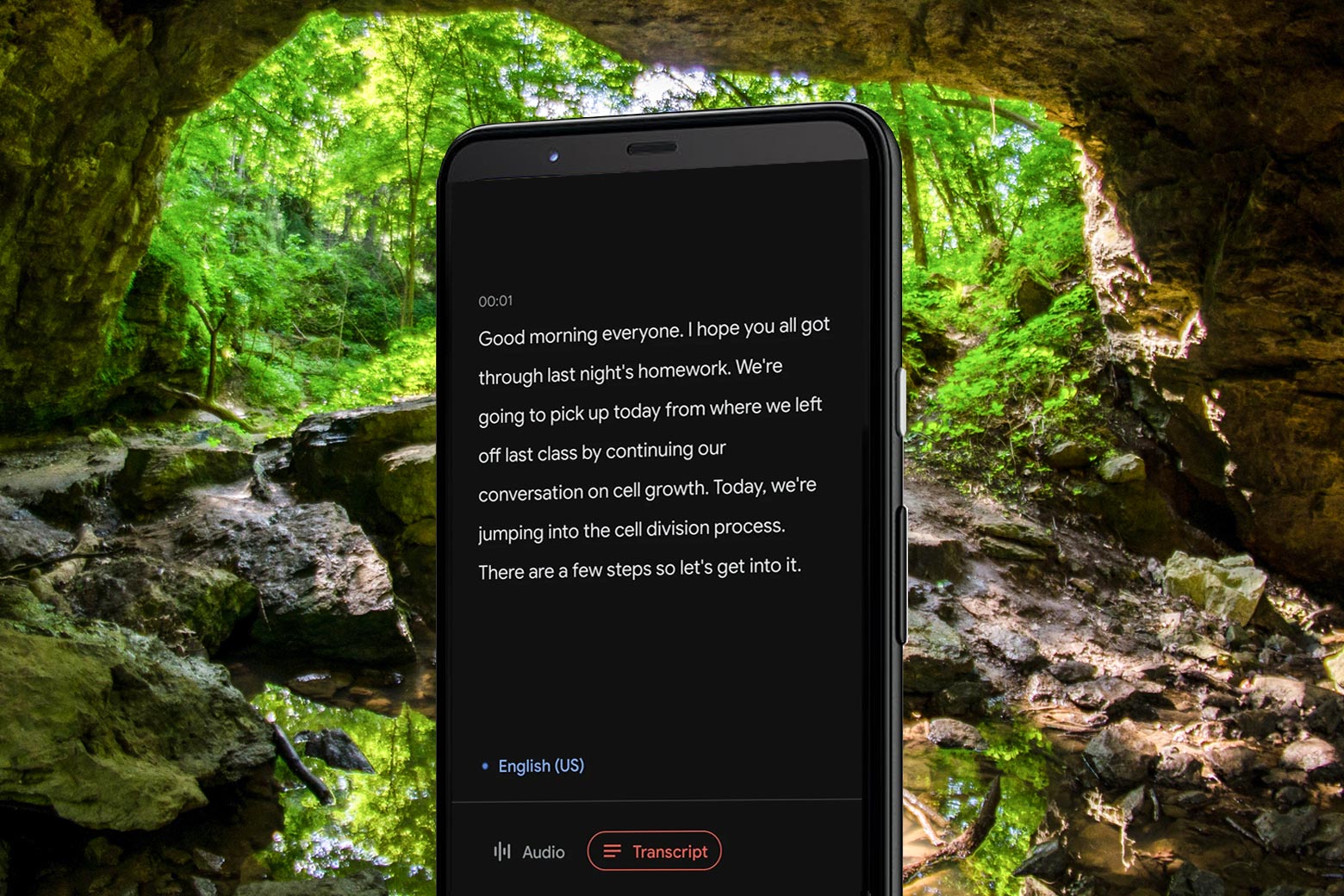

Fifty years later, Google announced the release of its new phone, the Pixel 4. Built into the Pixel’s Recorder app is a technology that entire banks of computers were once powerless to achieve: speech recognition. In an ad for the new feature, the narrator’s script appears on the screen as she reads it: “What if it could transcribe the words as they were being said?” she says. Then she shows off the app’s ability to recognize a few esoteric words: “Purple. Sibilance. Pecorino.” And, the ad notes, Recorder categorizes sounds into speech, music, and applause; allows you to search transcripts for specific words; and, most remarkably, works entirely offline, so you can record anywhere, “like in a cave. Not that you would.”

Here’s the thing: I would. And I have—or at least, I’ve tried to.

Last April, I was on a reporting trip just outside Longyearbyen, Norway, the world’s northernmost human settlement. I was shadowing a scientist and a doctor studying a group of women skiing to the North Pole, and the three of us decided to go on a tour where we drove dogsleds to an ice cave. Between the tour group and guides, there were around two dozen people taking turns descending into the cave and walking to its edge. The cave had been slowly melting for years, said the guides, and they anticipated it would soon collapse entirely, given the Arctic’s rapid warming. I was eager to capture their anecdotes, so I turned on my recorder.

The result: a 90-minute recording that contained maybe 10 minutes of actual speech, while the rest was the sound of my recorder rubbing against my shirt as I walked around said cave. I would have killed to have an automatic transcription of what happened in there, identifying when people were speaking versus that terrible shirt-rubbing sound.

If there’s one thing I hate more than the hype around new phone releases, it’s transcribing recordings. Journalists spend countless hours interviewing people, and it can take just as long to comb through those recordings for the juicy bits. Some offload that work to professional transcription services, which can cost more than $1 a minute. Artificial intelligence transcription services like Trint, Otter, and Temi have come onto the scene in the past few years. Each offers some free transcription, then charges a few cents per minute or a monthly subscription fee. The results are often mixed, especially if your interviews include any jargon, accents, or background noise; journalist Emily Underwood (or, as Trint heard, “Emily Underbite”) turned some of A.I. transcription’s mistakes into hilarious and surprisingly incisive found poems. But even those services don’t transcribe in real time: You must send in your audio files and wait at least a few minutes to see the results.

Any dark-magic device that could transcribe reasonably well in real time could be a godsend to working journalists, or to people with hearing impairments, or to college students recording lectures, or to people with fallible memories—that is, just about all of us.

To assess Recorder’s powers, Google provided me with a new Pixel 4, the only device on which the transcription feature is available. I recorded audio in a range of scenarios and compared Recorder’s live transcription to Otter’s transcripts of the same files. (I chose Otter because it provides the most transcription for free: up to 600 minutes a month.) I can confirm that Recorder’s live transcriptions were happening offline; I had no SIM card to use in my loaned Pixel 4, so it couldn’t have been processing using cellular data, and I was recording in places where I’d turned the Wi-Fi function off or I didn’t have access to Wi-Fi at all. I tested in four scenarios, which varied in sound quality and background noise. (Unfortunately, I was not able to make it to a cave for this.)

My first recording was what I expected would be easiest for both A.I. services to transcribe accurately: me—a person with a pretty standard American accent—talking directly into the mic, with no background noise. The transcripts from Recorder and Otter were nearly identical and got everything right minus a nickname I have for my dog. The results illuminated one major difference between the two services: Recorder does not differentiate between multiple speakers, whereas Otter does—or at least tries to. In this recording, Otter thought I was four different speakers, I’m guessing at least partly because I switched between human-directed speech—asking my husband if he wanted to say anything—and dog-directed speech. (If you’ve had a pet or even just watched enough pet videos online, you’re probably familiar with the higher-pitched, lilting pet voice.) My verdict? A draw between Otter and Recorder.

The next A.I. test was slightly harder: an interview I’d done in a Starbucks on a handheld recording device. Because Recorder doesn’t allow you to upload files for transcription, I just played the recording aloud on my computer. Here, Google and Otter performed comparably; both struggled with identifying less common proper names and misidentified certain words or phrases, though it seemed like Otter took more liberties with guessing. For example, whereas Google swapped “nation” for “intimidation,” Otter concluded that the phrase “before we talked last” was “as a performer, you taught a class.” Overall, Otter’s transcript was easier to follow because it attributes lines and phrases to different speakers. Though that segmentation is far from accurate, even the rough transcript helped jog my memory for what questions I was asking the interviewee, and was generally good at capturing conversational turns between the interviewee and me. Verdict: a second draw.
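The comparisons here are impressionistic, but the Otter error above can be scored with word error rate, a common speech-recognition metric: the number of word substitutions, insertions, and deletions needed to turn the transcript into what was actually said, divided by the number of words actually said. The sketch below is not how either transcript was judged for this piece; the function name `word_error_rate` and the punctuation-stripping step are illustrative assumptions.

```python
import string


def _tokens(text):
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    words = (w.strip(string.punctuation) for w in text.lower().split())
    return [w for w in words if w]


def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = _tokens(reference)
    hyp = _tokens(hypothesis)
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from transcript
            insertion = curr[j - 1] + 1  # extra word in transcript
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# What was said vs. what Otter heard, per the example in this piece.
print(word_error_rate("before we talked last",
                      "as a performer, you taught a class"))  # 1.75
```

The score can exceed 1 when, as here, the transcript shares no words with the original and is longer than it: four substitutions plus three insertions, over four spoken words.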

Later that night, I brought my phone out with me to record in a scenario I expected both Recorder and Otter to fail in: a very loud bar. I could barely hear my friend Mike next to me, and I asked him to say something into the phone. Recorder could only make out my first few words and none of Mike’s, but Otter rendered most of the clip with decent accuracy. Verdict: Otter was the clear winner here.

The next day, I brought the Pixel with me to a book talk in an auditorium. I was sitting in the second-to-last row of a renovated church basement that holds a maximum of about 250 people, so the sound quality could have been better, but Recorder and Otter both produced workable transcripts. Recorder, overall, produced a more readable transcript, with more consistent punctuation, whereas Otter’s was a jumble of phrases all stamped as separate “speakers,” even though a single speaker was monologuing. Both dropped key phrases but gave enough detail that it helped me remember moments from the talk, allowing me to go back and find specific segments. Verdict: another wash.

Otter’s one claim to fame was outperforming Recorder in a noisy environment, but overall I was impressed at how well Recorder transcribed using only tools built into the phone. Before beginning this very unscientific experiment, I had no real hypotheses about how the tech might perform. On the one hand, one might expect Otter to outperform Google because it, theoretically, has more processing power; unlike Recorder, which is built into the Pixel and runs offline, Otter processes uploaded files remotely and so has the luxury of running what could be more complex analyses. But on the other hand, Google is Google, and with the tens of millions of phones and smart speakers it’s sold worldwide, it has the potential to analyze a ton of data, which would serve as excellent training for any A.I.

That data appears to have served Google well. In a couple of papers on automatic speech recognition, Google engineers report that their projects’ training data comes from millions of Google voice-search and dictation clips, representing tens of thousands of hours of speech. A Google representative said this was the same training data used in Google’s other voice recognition technologies. I asked for more specifics, and several days ago the rep offered to provide a response from a Pixel engineer, but I hadn’t heard by the time of publication. (We’ll update this piece if Google responds.)

There’s no doubt that Google will continue building on this technology. For one, the transcription function is only available in English at the moment, and a Google representative tells me they’re not ready to share anything about their plans beyond that they hope to expand into other languages. But what I most wanted to know is whether our recordings might become training fodder to build the next versions of Recorder. It would make sense that the best way to improve the app would be to feed it more data from the app. Google also has not yet responded to my question about whether Recorder data will be used, but based on the Pixel’s default settings, it seems like Google’s trying to “not be evil.” When opening the app for the first time, the user sees a gentle reminder: “Record respectfully around others.”

The big privacy question about Recorder is whether recorded data leaves the phone, says Jessica Vitak, an associate professor at the University of Maryland’s College of Information Studies. Once you upload files—as you must for analysis by other A.I. transcription companies—sensitive data can be compromised, either while files are being transferred or while sitting in storage on some server as part of a training data set.

And after the public became aware of (and then concerned about) how smart speaker and Google Assistant voice data was being collected, the company apologized and announced new initiatives toward transparency. So it’s not surprising that Google has made a concerted effort to advertise that Recorder stores files directly on your phone and that the transcription feature works offline. After you make your first recording, the app tells you that recordings are only saved on your phone and that any backup (like linking to Google Drive) requires action.

Judging by the app’s default security settings, Pixel allows Recorder to access your microphone (for obvious reasons) but not your location. It’s unclear exactly what turning on location services would do for the user—as Vitak told me, if you’re recording something, you probably already know where you are—and, given what we know about how your location can give away lots about your identity, it seems wisest to leave that setting off.

So Recorder works, and the privacy settings don’t raise any immediate red flags. But there are other things to consider if you’re thinking about buying the phone (in part) for its transcription abilities. For instance, Recorder doesn’t appear to be compatible with any other apps that use the microphone, like Google Voice or Hangouts, so recording a call, which is often what I’d need the app to do, doesn’t seem possible. And then there’s the price: The Pixel 4 starts at $799. You’d have to transcribe a lot of material to hit that price tag in transcription fees, but perhaps the Pixel’s added layer of privacy is worth it.
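For a rough sense of how much material that is, here is a back-of-the-envelope sketch. The $799 price and the $1-a-minute professional rate come from the figures in this piece; the 10-cents-a-minute A.I. rate is an assumed stand-in for “a few cents per minute,” and the function name `break_even_minutes` is made up for illustration. Free tiers and monthly subscriptions are ignored.

```python
PIXEL_4_PRICE_CENTS = 79_900      # $799 starting price, from the article
PROFESSIONAL_RATE_CENTS = 100     # $1 per minute, the article's floor for human transcription
ASSUMED_AI_RATE_CENTS = 10        # assumption: stands in for "a few cents per minute"


def break_even_minutes(device_price_cents, rate_cents_per_minute):
    """Minutes of paid transcription whose fees would equal the device price.

    Works in whole cents to avoid floating-point rounding, and rounds up
    so a partial minute counts as a full one.
    """
    if rate_cents_per_minute <= 0:
        raise ValueError("rate must be positive")
    return -(-device_price_cents // rate_cents_per_minute)  # ceiling division


for label, rate in [("professional", PROFESSIONAL_RATE_CENTS),
                    ("A.I. (assumed)", ASSUMED_AI_RATE_CENTS)]:
    minutes = break_even_minutes(PIXEL_4_PRICE_CENTS, rate)
    print(f"{label}: {minutes} minutes, about {minutes / 60:.1f} hours")
```

Under those assumptions, the phone pays for itself after 799 minutes (roughly 13 hours) of professionally transcribed audio, or 7,990 minutes (roughly 133 hours) at the assumed A.I. rate.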

Future Tense is a partnership of Slate, New America, and Arizona State University that examines emerging technologies, public policy, and society.